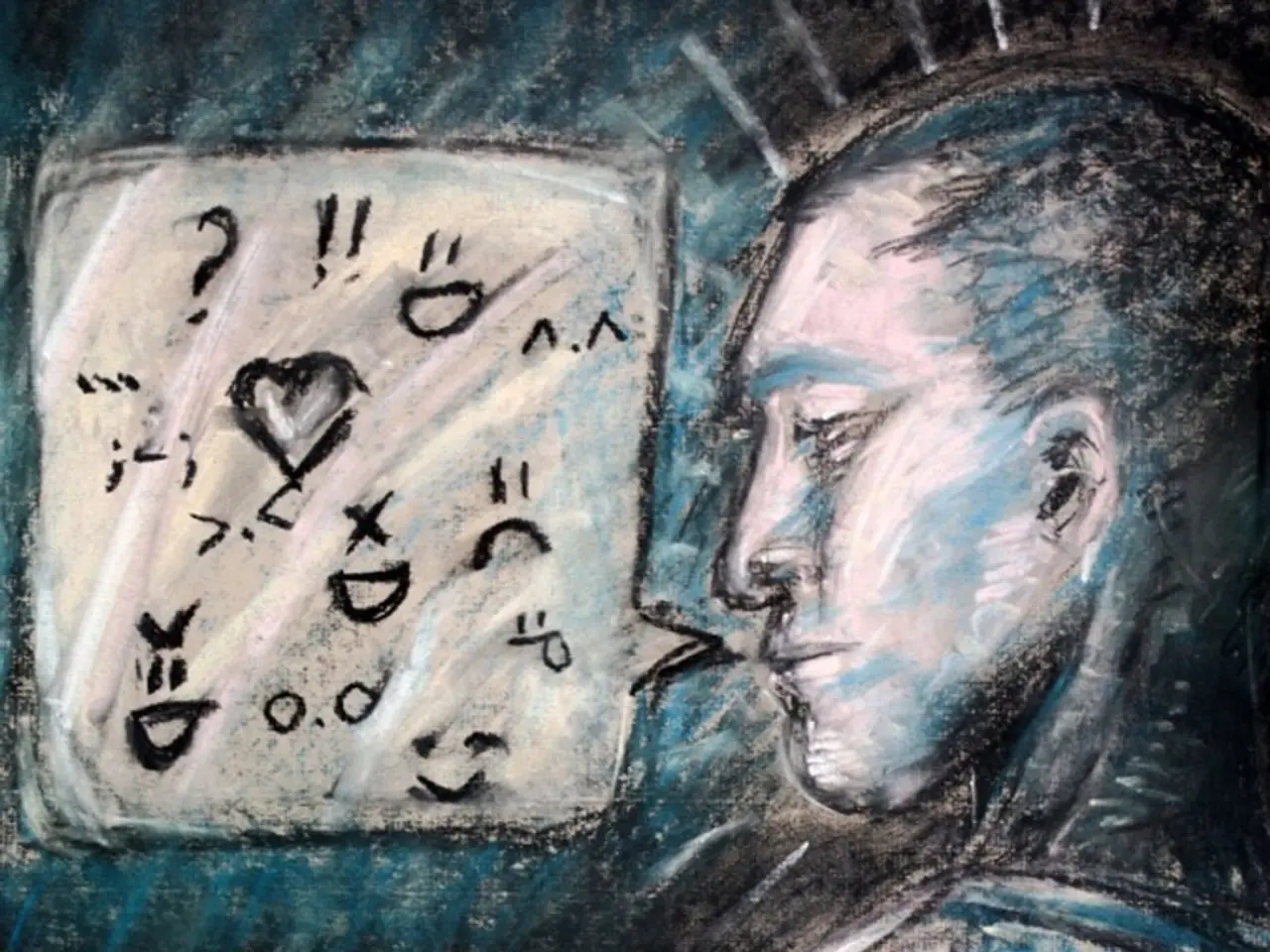

OpenAI researchers explain why AI chatbots hallucinate and propose changes to how models are evaluated to curb the problem.

OpenAI researchers say they have identified a key cause of one of the biggest challenges facing large language models: hallucinations. According to a paper published on Thursday, these models often present inaccurate information as fact.

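The paper's central claim is that large language models hallucinate because the methods used to train them reward guessing more than acknowledging uncertainty. Under an accuracy-only score, a wrong answer and an "I don't know" both earn nothing, so guessing never scores worse than abstaining. The researchers say this fundamental problem has been exacerbated by the widespread use of accuracy-based evaluations.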

These widely used evaluations, the researchers argue, need to be updated so that models are no longer penalized for abstaining when they are uncertain. OpenAI suggests that redesigning the metrics in this way would discourage guessing and could significantly improve the performance and reliability of large language models.
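The incentive described here can be illustrated with a little arithmetic. The sketch below is not taken from the paper: the scoring rule (1 point for a correct answer, 0 for abstaining, a configurable penalty for a wrong answer) and the names `expected_score` and `best_action` are assumptions chosen for illustration. It compares plain accuracy, where a wrong answer costs nothing, with a hypothetical metric that deducts a point for each wrong answer.

```python
def expected_score(p_correct: float, wrong_penalty: float) -> float:
    """Expected score for answering when the model is right with probability p_correct.

    Assumed scoring rule (illustrative only): correct = +1,
    wrong = -wrong_penalty, abstain = 0.
    """
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty


def best_action(p_correct: float, wrong_penalty: float) -> str:
    """Pick whichever action has the higher expected score; abstaining scores 0."""
    return "guess" if expected_score(p_correct, wrong_penalty) > 0.0 else "abstain"


if __name__ == "__main__":
    # penalty 0.0 is plain accuracy; penalty 1.0 is a hypothetical redesigned metric
    for penalty in (0.0, 1.0):
        for p in (0.1, 0.3, 0.6, 0.9):
            score = expected_score(p, penalty)
            print(f"penalty={penalty} p={p}: "
                  f"expected={score:+.2f} -> {best_action(p, penalty)}")
```

With a penalty of zero, guessing has a positive expected score for any nonzero chance of being right, so a model tuned to that metric has no reason to abstain. Once wrong answers cost a point, guessing only pays off when the model is more likely right than wrong.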

Discouraging guessing should, in turn, reduce hallucinations, the paper argues. Researchers from Anthropic have also worked in this area, developing a new evaluation metric for large language models aimed at avoiding hallucinations.

OpenAI's GPT-5 and Anthropic's Claude are among the large language models affected by hallucinations. OpenAI noted in a recent blog post, however, that Claude models are more aware of their uncertainty and often avoid making inaccurate statements. That caution could limit their usefulness, but it is a step in the right direction.

The blog post accompanying the paper discusses the importance of the findings and their potential impact on the future of large language models. OpenAI did not immediately respond to a request for comment regarding the redesign of evaluation metrics.

The issue of hallucinations in large language models has been a topic of concern for some time. The researchers describe these models as always being in "test-taking mode," pushed to bluff an answer rather than admit they do not know, with some being better at it than others. The proposed solution is to redesign evaluation metrics, a move that could lead to more accurate and reliable language models in the future.
